1. Virtualized Infrastructure Manager¶

1.1. Prerequisites¶

Access to fabric management nodes

Basic services are available and configured

dhcpd

tftpd

httpd

coredns

registry

monitor

1.1.1. Requirements¶

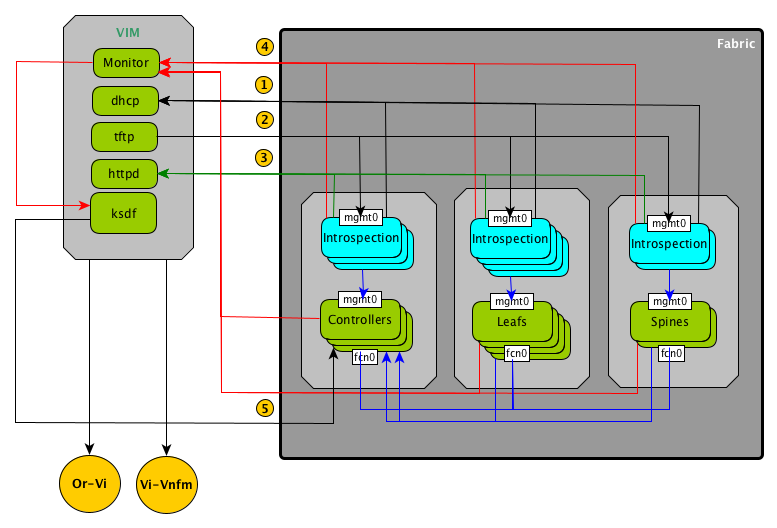

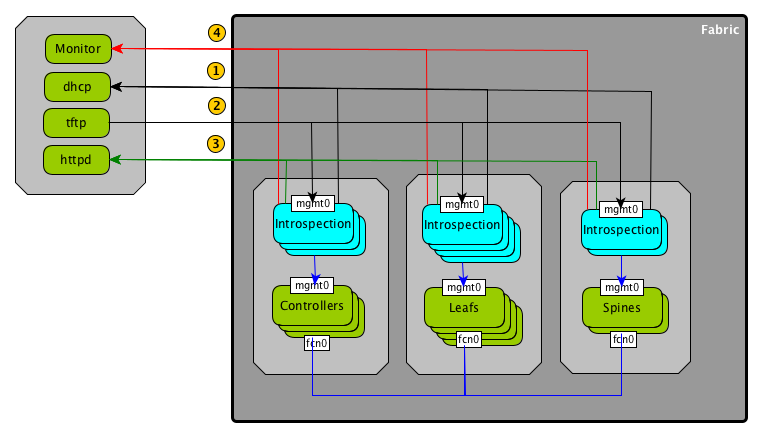

Basic connectivity model using OOB NWs

Connectivity between DC Services and Nodes is available

1.2. Overview¶

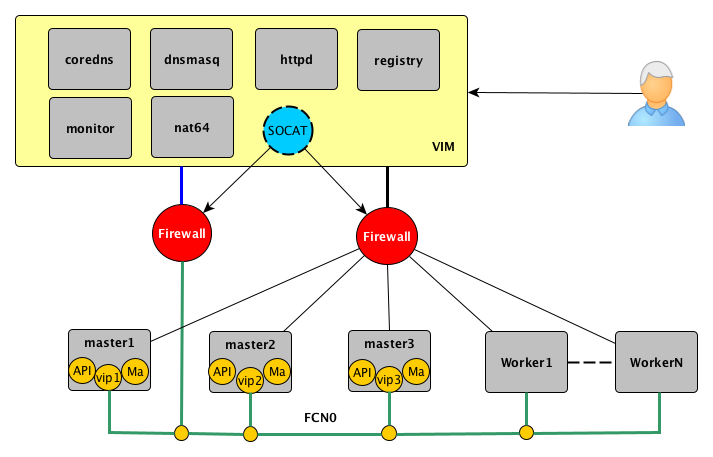

The VIM controls and manages the NFV infrastructure (NFVI) compute, storage, and network resources, usually within one operator’s infrastructure domain. The VIM is a specific part of the MANO framework, and a network can run multiple VIM instances. Instances come in two general kinds: some manage multiple types of NFVI resources, while others are specialized to handle a single type.

The VIM covers the management of virtualized resources, including information, provisioning, reservation, capacity, performance, and fault management. This scope corresponds to the functionality produced by the VIM and exposed over the Or-Vi and Vi-Vnfm reference points.

1.2.1. Stages¶

The fabric lifecycle stages assume that the infrastructure is in place and that the basic VIM services are available and reachable over the OOB network by all nodes.

Introspection

Node install including Basic Fabric and Keepalived static pods on all nodes

Create initial cluster

Decommission bootstrap and install the third controller

Add workers to the cluster

Deploy fabric services

Node Update

Node Decommissioning

1.3. Quick Start¶

If the VIM is located remotely, an L3 VXLAN tunnel can be created between the VIM and a node directly connected to the fabric.

Note

The underlay network can be either IPv4 or IPv6.

On the VIM node

ip link add name vxlan42 type vxlan id 42 dev eth1 remote 2602:807:900e:141:0:1866:dae6:4674 local 2602:807:9000:8::10b dstport 4789

ip -6 address add fd02:9a04::1/64 dev vxlan42

ip link set up vxlan42

On the directly connected node:

ip link add name vxlan42 type vxlan id 42 dev mgmt0 remote 2602:807:9000:8::10b local 2602:807:900e:141:0:1866:dae6:4674 dstport 4789

ip -6 address add fd02:9a04::2/64 dev vxlan42

ip link set up vxlan42

On the VIM node, add a static route to the svc0 network via the VXLAN tunnel

ip -6 route add fd02:9a01::/64 via fd02:9a04::2 dev vxlan42
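
Both tunnel endpoints run the same three commands with the local and remote underlay addresses swapped. The following is a minimal sketch of that symmetry, assuming a hypothetical helper named `vxlan_cmds` (not part of the cluster repository) that only prints the commands so they can be reviewed before being run as root.

```shell
#!/bin/sh
# Print (do not execute) the commands that bring up one end of the tunnel.
# vxlan_cmds is a hypothetical helper used for illustration only.
# Usage: vxlan_cmds <underlay-dev> <local-underlay> <remote-underlay> <overlay-addr/prefix>
vxlan_cmds() {
    dev=$1
    local_ip=$2
    remote_ip=$3
    overlay=$4
    echo "ip link add name vxlan42 type vxlan id 42 dev $dev remote $remote_ip local $local_ip dstport 4789"
    echo "ip -6 address add $overlay dev vxlan42"
    echo "ip link set up vxlan42"
}
```

For example, `vxlan_cmds eth1 2602:807:9000:8::10b 2602:807:900e:141:0:1866:dae6:4674 fd02:9a04::1/64` prints the VIM side; swapping the two underlay addresses and using `mgmt0` and `fd02:9a04::2/64` prints the other side.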

1.3.1. VIM node¶

Get the code

mkdir vim && cd vim

git clone --recurse-submodules -j8 https://gitlab.stroila.ca/slg/ocp/cluster.git

pushd cluster

git submodule update --remote

popd

Install basic services in the VIM node

pushd cluster

make clean && make deps

popd

1.3.2. PXE infrastructure nodes¶

Use the BIOS or iPXE method

1.3.3. Bootstrap node¶

Patch etcd once the API server is up

oc patch etcd cluster -p='{"spec": {"unsupportedConfigOverrides": {"useUnsupportedUnsafeNonHANonProductionUnstableEtcd": true}}}' --type=merge --kubeconfig /etc/kubernetes/kubeconfig

1.3.4. Master nodes¶

Ignite the two masters

curl http://registry.ocp.labs.stroila.ca/cfg/master.ign > /boot/ignition/config.ign

touch /boot/ignition.firstboot

reboot

1.3.5. Convert Bootstrap into Master¶

Ignite the third master

curl http://registry.ocp.labs.stroila.ca/cfg/master.ign > /boot/ignition/config.ign

touch /boot/ignition.firstboot

reboot
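
The same three steps (fetch the Ignition config, flag a first boot, reboot) recur for the bootstrap and master roles. As a sketch only, the hypothetical helper `ignite_cmds` below prints those steps for a given role. It assumes the URL layout shown in the commands above, and it uses `curl -f ... -o` instead of a shell redirect so that curl exits non-zero on an HTTP error rather than saving the server's error page as the config.

```shell
#!/bin/sh
# Print the three firstboot steps for a role ("bootstrap" or "master").
# ignite_cmds is a hypothetical helper; it prints commands and runs nothing.
ignite_cmds() {
    role=$1
    case $role in
        bootstrap|master) ;;
        *) echo "unknown role: $role" >&2; return 1 ;;
    esac
    echo "curl -f http://registry.ocp.labs.stroila.ca/cfg/$role.ign -o /boot/ignition/config.ign"
    echo "touch /boot/ignition.firstboot"
    echo "reboot"
}
```

Running `ignite_cmds master` prints the sequence used for each master; the output can be reviewed and then executed on the node as root.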

1.3.6. Approve CSRs¶

From VIM node

oc get csr --kubeconfig /opt/ocp/assets/auth/kubeconfig

For each “Pending” CSR:

oc adm certificate approve <csr name> --kubeconfig /opt/ocp/assets/auth/kubeconfig
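
Approving requests one at a time gets tedious when several nodes join at once. The sketch below filters the pending names out of the `oc get csr --no-headers` listing and approves each one. The function names and the `KUBECONFIG_PATH` variable are illustrative assumptions; the filter relies on the last column of the listing being the CONDITION column.

```shell
#!/bin/sh
# Read `oc get csr --no-headers` output on stdin and print the names of
# requests whose last column (CONDITION) is exactly "Pending".
pending_csrs() {
    awk '$NF == "Pending" { print $1 }'
}

# Approve every pending CSR. KUBECONFIG_PATH is a hypothetical override that
# defaults to the kubeconfig path used in this section.
approve_pending_csrs() {
    kubeconfig=${KUBECONFIG_PATH:-/opt/ocp/assets/auth/kubeconfig}
    oc get csr --no-headers --kubeconfig "$kubeconfig" | pending_csrs |
    while read -r name; do
        oc adm certificate approve "$name" --kubeconfig "$kubeconfig"
    done
}
```

New nodes typically raise a client request first and a serving request afterwards, so `approve_pending_csrs` may need to be run more than once.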

1.3.7. Verify the Installation¶

Verify cluster operators

[root@node-f01fafce5ded infra]# oc get co --kubeconfig /opt/ocp/assets/auth/kubeconfig

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE MESSAGE

authentication 4.9.23 True False False 159m

baremetal 4.9.23 True False False 3h7m

cloud-controller-manager 4.9.23 True False False 3h23m

cloud-credential 4.9.23 True False False 4h8m

cluster-autoscaler 4.9.23 True False False 3h7m

config-operator 4.9.23 True False False 3h9m

console 4.9.23 True False False 162m

csi-snapshot-controller 4.9.23 True False False 3h8m

dns 4.9.23 True False False 3h6m

etcd 4.9.23 True False False 3h6m

image-registry 4.9.23 True False False 171m

ingress 4.9.23 True False False 165m

insights 4.9.23 True False False 168m

kube-apiserver 4.9.23 True False False 165m

kube-controller-manager 4.9.23 True False False 3h6m

kube-scheduler 4.9.23 True False False 3h6m

kube-storage-version-migrator 4.9.23 True False False 3h9m

machine-api 4.9.23 True False False 3h7m

machine-approver 4.9.23 True False False 3h7m

machine-config 4.9.23 True False False 166m

marketplace 4.9.23 True False False 3h7m

monitoring 4.9.23 True False False 164m

network 4.9.23 True False False 3h10m

node-tuning 4.9.23 True False False 3h7m

openshift-apiserver 4.9.23 True False False 165m

openshift-controller-manager 4.9.23 True False False 3h6m

openshift-samples 4.9.23 True False False 166m

operator-lifecycle-manager 4.9.23 True False False 3h7m

operator-lifecycle-manager-catalog 4.9.23 True False False 3h7m

operator-lifecycle-manager-packageserver 4.9.23 True False False 169m

service-ca 4.9.23 True False False 3h9m

storage 4.9.23 True False False 3h9m
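
Scanning a long operator table by eye is error-prone. As a minimal sketch, the filter below reads the same listing without headers and prints only the operators that are not in the healthy state shown above (AVAILABLE True, PROGRESSING False, DEGRADED False). It assumes the VERSION column is populated, so the three status columns are fields 3 to 5.

```shell
#!/bin/sh
# Read `oc get co --no-headers` output on stdin and print every operator that
# is not Available=True, Progressing=False, Degraded=False.
# Assumes fields are NAME VERSION AVAILABLE PROGRESSING DEGRADED ...
unhealthy_operators() {
    awk '$3 != "True" || $4 != "False" || $5 != "False" { print $1 }'
}
```

Usage: `oc get co --no-headers --kubeconfig /opt/ocp/assets/auth/kubeconfig | unhealthy_operators` prints nothing when every operator is healthy.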

Verify the cluster version

[root@node-f01fafce5ded infra]# oc get clusterversion --kubeconfig /opt/ocp/assets/auth/kubeconfig

NAME VERSION AVAILABLE PROGRESSING SINCE STATUS

version 4.9.23 True False 163m Cluster version is 4.9.23

Verify the cluster nodes

[root@node-f01fafce5ded infra]# oc get nodes -o wide --kubeconfig /opt/ocp/assets/auth/kubeconfig

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

node-90b11c4c8bf6.montreal-317.slabs.stroila.ca Ready master,worker 3h13m v1.22.3+b93fd35 fd53:f:a:b:0:90b1:1c4c:8bf6 <none> Red Hat Enterprise Linux CoreOS 49.84.202202230006-0 (Ootpa) 4.18.0-305.34.2.el8_4.x86_64 cri-o://1.22.1-21.rhaos4.9.git74a7981.2.el8

node-b8ca3a6f3d48.montreal-317.slabs.stroila.ca Ready master,worker 3h13m v1.22.3+b93fd35 fd53:f:a:b:0:b8ca:3a6f:3d48 <none> Red Hat Enterprise Linux CoreOS 49.84.202202230006-0 (Ootpa) 4.18.0-305.34.2.el8_4.x86_64 cri-o://1.22.1-21.rhaos4.9.git74a7981.2.el8

node-b8ca3a6f7eb8.montreal-317.slabs.stroila.ca Ready master,worker 3h13m v1.22.3+b93fd35 fd53:f:a:b:0:b8ca:3a6f:7eb8 <none> Red Hat Enterprise Linux CoreOS 49.84.202202230006-0 (Ootpa) 4.18.0-305.34.2.el8_4.x86_64 cri-o://1.22.1-21.rhaos4.9.git74a7981.2.el8

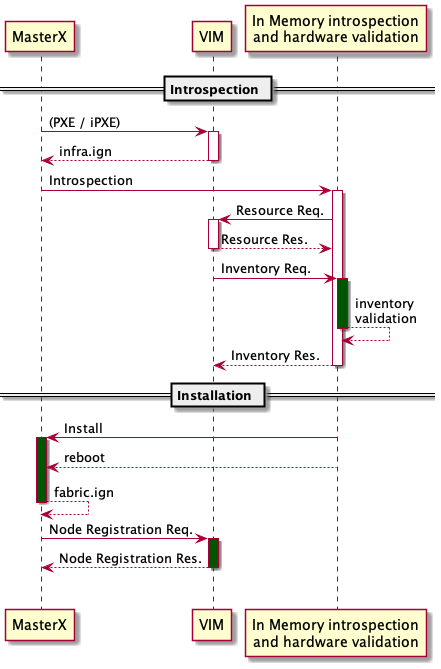

1.4. Node Introspection & Installation¶

Introspection is a VIM function. The node boots an in-memory system using a nested Ignition config, which calls the run-coreos-installer service. If introspection succeeds, run-coreos-installer installs CoreOS on disk using a custom Ignition config and reports back to the monitoring function via REST. If it fails, the service reports information about the faulty hardware instead.
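
To make the reporting step concrete, here is a purely hypothetical sketch of how a result could be sent to the monitoring function. The JSON field names, the status values, and the `MONITOR_URL` variable are all assumptions made for illustration; the actual REST interface of the VIM is not described in this document.

```shell
#!/bin/sh
# Hypothetical sketch of the "report back" step. Field names and MONITOR_URL
# are assumptions, not the VIM's actual REST API.

# Escape backslashes and double quotes so a value can sit inside a JSON string.
# Assumes single-line input without control characters.
json_escape() {
    printf '%s' "$1" | sed 's/\\/\\\\/g; s/"/\\"/g'
}

# Build the report body from node name, status ("installed" or "faulty"),
# and a free-text detail.
build_report() {
    printf '{"node":"%s","status":"%s","detail":"%s"}\n' \
        "$(json_escape "$1")" "$(json_escape "$2")" "$(json_escape "$3")"
}

# POST the report only when MONITOR_URL is set; otherwise just print it.
send_report() {
    body=$(build_report "$1" "$2" "$3")
    if [ -n "${MONITOR_URL:-}" ]; then
        curl -fsS -X POST -H 'Content-Type: application/json' -d "$body" "$MONITOR_URL"
    else
        printf '%s\n' "$body"
    fi
}
```

With `MONITOR_URL` unset, `send_report node-1 faulty 'disk missing'` only prints the payload, which makes the sketch safe to try outside the fabric.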

1.4.1. Hardware discovery/inspection library¶

1.4.1.1. Functions implemented:¶

getMemory()

getCpu()

getBlockStorage()

getTopology()

getNetwork()

getLocalAddresses()

getPci()

getDevices()

getGPU()

getChassis()

getBaseboard()

getProduct()

getSerialization()

Report example:

processor core #0 (2 threads), logical processors [0 8]

processor core #1 (2 threads), logical processors [1 9]

processor core #2 (2 threads), logical processors [2 10]

processor core #3 (2 threads), logical processors [3 11]

processor core #4 (2 threads), logical processors [4 12]

processor core #5 (2 threads), logical processors [5 13]

processor core #6 (2 threads), logical processors [6 14]

processor core #7 (2 threads), logical processors [7 15]

capabilities: [msr pae mce cx8 apic sep

mtrr pge mca cmov pat pse36

clflush dts acpi mmx fxsr sse

sse2 ss ht tm pbe syscall

nx pdpe1gb rdtscp lm constant_tsc arch_perfmon

pebs bts rep_good nopl xtopology nonstop_tsc

cpuid aperfmperf pni pclmulqdq dtes64 monitor

ds_cpl vmx smx est tm2 ssse3

sdbg fma cx16 xtpr pdcm pcid

dca sse4_1 sse4_2 x2apic movbe popcnt

tsc_deadline_timer aes xsave avx f16c rdrand

lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3

cdp_l3 invpcid_single pti intel_ppin ssbd ibrs

ibpb stibp tpr_shadow vnmi flexpriority ept

vpid fsgsbase tsc_adjust bmi1 hle avx2

smep bmi2 erms invpcid rtm cqm

rdt_a rdseed adx smap intel_pt xsaveopt

cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm arat

pln pts md_clear flush_l1d]

#########################################################################

memory (48GB physical, 48GB usable)

#########################################################################

block storage (2 disks, 2TB physical storage)

dm-0 SSD (931GB) Unknown [@unknown (node #0)] vendor=unknown

sda SSD (932GB) SCSI [@pci-0000:00:1f.2-ata-1 (node #0)] vendor=ATA model=CT1000MX500SSD4 serial=2007E28A19AF WWN=0x500a0751e28a19af

sda1 (384MB) [ext4] mounted@/boot

sda2 (127MB) [vfat] mounted@/boot/efi

sda3 (1MB)

sda4 (932GB)

#########################################################################

topology SMP (1 nodes)

node #0 (8 cores)

L1i cache (32 KB) shared with logical processors: 0,8

L1i cache (32 KB) shared with logical processors: 1,9

L1i cache (32 KB) shared with logical processors: 2,10

L1i cache (32 KB) shared with logical processors: 3,11

L1i cache (32 KB) shared with logical processors: 4,12

L1i cache (32 KB) shared with logical processors: 5,13

L1i cache (32 KB) shared with logical processors: 6,14

L1i cache (32 KB) shared with logical processors: 7,15

L1d cache (32 KB) shared with logical processors: 0,8

L1d cache (32 KB) shared with logical processors: 1,9


1.4.2. Configuration¶

1.4.2.1. FCCT¶

fcct, the Fedora CoreOS Config Transpiler, is a tool that produces a JSON Ignition file from the YAML FCC file. Using the FCC file, an FCOS machine can be told to create users, create filesystems, set up the network, install systemd units, and more.

Create the FCCT definition file automated_install.yaml

variant: fcos
version: 1.1.0
systemd:
  units:
    - name: run-coreos-installer.service
      enabled: true
      contents: |
        [Unit]
        After=network-online.target
        Wants=network-online.target
        Before=systemd-user-sessions.service
        OnFailure=emergency.target
        OnFailureJobMode=replace-irreversibly
        [Service]
        RemainAfterExit=yes
        Type=oneshot
        ExecStart=/usr/local/bin/run-coreos-installer
        ExecStartPost=/usr/bin/systemctl --no-block reboot
        StandardOutput=kmsg+console
        StandardError=kmsg+console
        [Install]
        WantedBy=multi-user.target
storage:
  files:
    - path: /home/core/install
      overwrite: true
      contents:
        inline: |
          # This will trigger rhcos to be installed on disk
    - path: /etc/hosts
      overwrite: true
      # Static host entries, including the VIM server
      contents:
        inline: |
          127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
          ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
          {{VIM6}} vim.lab.stroila.ca vim
    - path: /usr/local/bin/run-coreos-installer
      overwrite: true
      contents:
        source: http://[{{VIM6}}]/ocp/run-coreos-installer.gz
        compression: gzip
        verification:
          hash: sha512-{{run-coreos-installer}}
      mode: 0755
    - path: /usr/local/bin/ethtool
      overwrite: true
      # Deploys this tool by copying an executable from an HTTP link. The
      # file is compressed with gzip.
      contents:
        source: http://[{{VIM6}}]/ocp/ethtool.gz
        compression: gzip
        verification:
          # The hash is "sha512-" followed by the 128 hex characters given by
          # the sha512sum command.
          hash: sha512-{{ETHTOOL}}
      # Makes the tool file readable and executable by all.
      mode: 0555
    - path: /home/core/inventory
      overwrite: true
      contents:
        source: http://[{{VIM6}}]/ocp/inventory.gz
        compression: gzip
        verification:
          hash: sha512-{{INVENTORY}}
      mode: 0555
    - path: /usr/local/bin/dmidecode
      overwrite: true
      contents:
        source: http://[{{VIM6}}]/ocp/dmidecode.gz
        compression: gzip
        verification:
          hash: sha512-{{DMIDECODE}}
      mode: 0555

Note

The sha512 hash is computed over the original (uncompressed) file, not over the compressed resource.
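
Because the hash must come from the original file, it is easiest to compute it before compressing. The sketch below does both steps in that order. The helper name `ignition_hash` is an assumption for illustration, and it relies on the `sha512sum` and `gzip` commands being available.

```shell
#!/bin/sh
# Print the Ignition-style hash ("sha512-" plus 128 hex characters) of the
# ORIGINAL file, then write a gzip copy next to it for publishing.
# ignition_hash is a hypothetical helper name.
ignition_hash() {
    file=$1
    # Hash the uncompressed bytes; reading from stdin keeps the file name
    # out of the sha512sum output.
    printf 'sha512-%s\n' "$(sha512sum < "$file" | cut -d' ' -f1)"
    # -c writes to stdout, so the original file stays in place.
    gzip -9 -c "$file" > "$file.gz"
}
```

For example, `ignition_hash ethtool` prints the value to place in the `hash:` field and leaves `ethtool.gz` ready to be served by the VIM.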

Pull the container for fcct

podman pull quay.io/coreos/fcct

Run fcct on the FCC file

podman run -i --rm quay.io/coreos/fcct:latest --pretty --strict < ./docs/source/config/automated_install.yaml > automated_install.ign

1.4.3. Static Pods¶

Static pods are included in the fabric ignition and are role and hardware specific.

A keepalived static pod is deployed on every controller in an active-active-active configuration and monitors the API server.

1.4.4. Node install¶

The node installation is triggered via nested Ignition upon successful node introspection.

1.5. Create initial cluster¶

Select one of the controllers to act as a temporary deployment node.

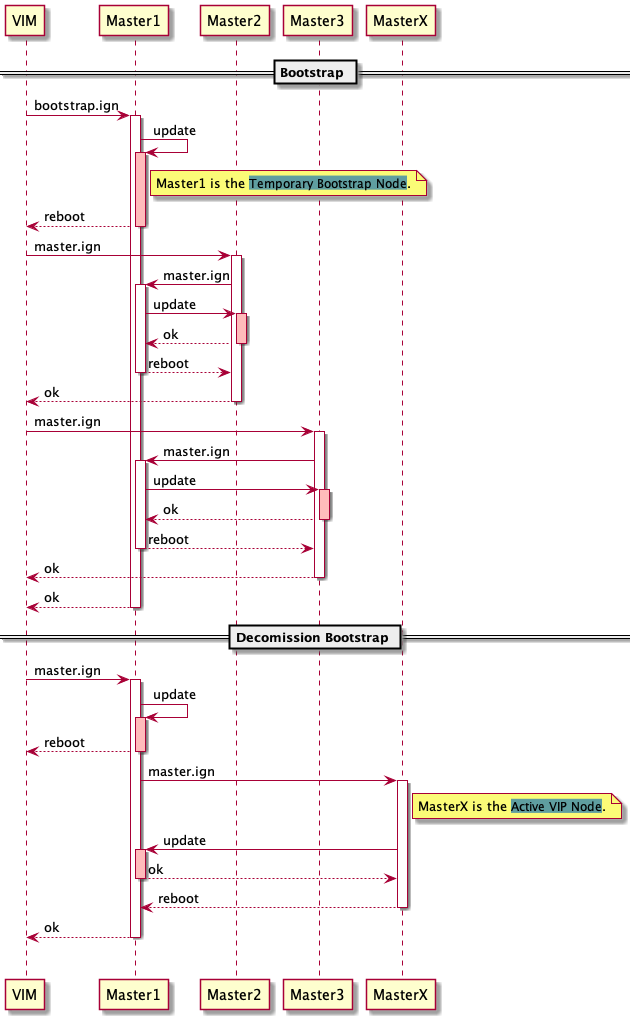

The sequence diagram describes how, and in what order, a group of objects works together.

The desired state

Note

The bootstrap stage can run in memory; after it completes successfully, the OS is installed using a master Ignition config.

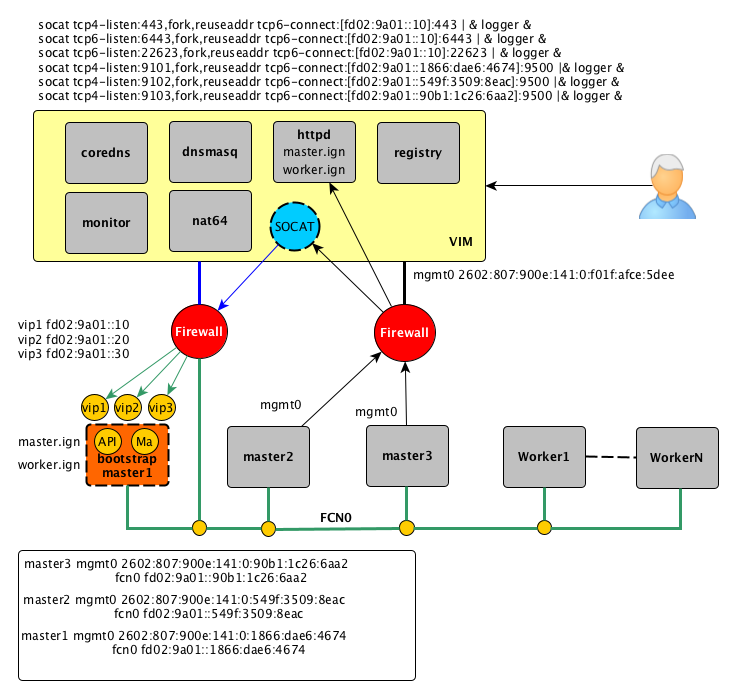

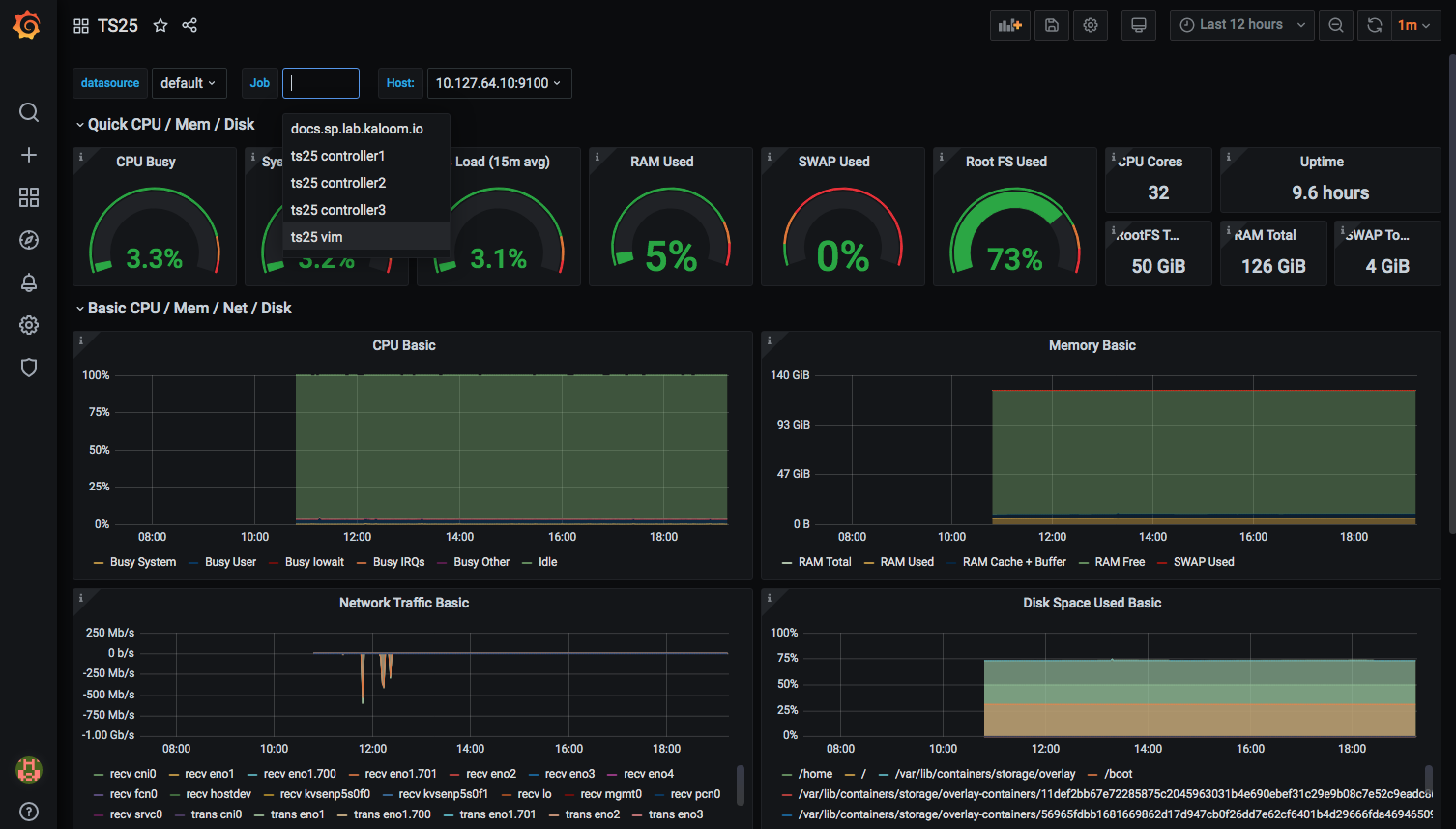

Cluster monitoring can be accessed from the dashboard service on the IPv4 network through socat, which relays to node_exporter running over IPv6.

1.5.1. Socat¶

The socat utility is a relay for bidirectional data transfers between two independent data channels.

There are many different types of channels socat can connect, including:

Files

Pipes

Devices (serial line, pseudo-terminal, etc)

Sockets (UNIX, IP4, IP6 - raw, UDP, TCP)

SSL sockets

Proxy CONNECT connections

File descriptors (stdin, etc)

The GNU line editor (readline)

Programs

Combinations of two of these

There are many ways to use socat effectively. Here are a few examples:

TCP port forwarder (one-shot or daemon)

External socksifier

Tool to attack weak firewalls (security and audit)

Shell interface to Unix sockets

IP6 relay

Redirect TCP-oriented programs to a serial line

Logically connect serial lines on different computers

Establish a relatively secure environment (su and chroot) for running client or server shell scripts with network connections

Here socat forwards traffic between IPv6 and IPv4 networks. The VIM server temporarily acts as a port forwarder to reach the bootstrap API and machine-config services.

Examples

Redirect console

socat tcp4-listen:443,fork,reuseaddr tcp6-connect:[fd02:9a01::10]:443 |& logger &

Note

You need to add console-openshift-console.apps.ocp.labs.stroila.ca and oauth-openshift.apps.ts25.labs.stroila.ca to /etc/hosts or DNS.

Redirect api and machine-config server

socat tcp6-listen:6443,fork,reuseaddr tcp6-connect:[fd02:9a01::10]:6443 |& logger &

socat tcp6-listen:22623,fork,reuseaddr tcp6-connect:[fd02:9a01::10]:22623 |& logger &

Redirect node_exporter from IPv4 to IPv6

socat tcp4-listen:9101,fork,reuseaddr tcp6-connect:[2602:807:900e:141:0:1866:dae6:4674]:9100 |& logger &

socat tcp4-listen:9102,fork,reuseaddr tcp6-connect:[2602:807:900e:141:0:549f:3509:8eac]:9100 |& logger &

socat tcp4-listen:9103,fork,reuseaddr tcp6-connect:[2602:807:900e:141:0:90b1:1c26:6aa2]:9100 |& logger &

Fake local DNS service

socat udp6-recvfrom:53,fork tcp:localhost:5353 |& logger &

socat tcp6-listen:5353,reuseaddr,fork udp6-sendto:[2602:807:900e:141:0:f01f:afce:5ded]:53 |& logger &
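
The node_exporter forwards above follow a simple pattern: one IPv4 listener per node, on consecutive ports starting at 9101, each relaying to port 9100 on the node's IPv6 address. A minimal sketch of that pattern, using a hypothetical helper `exporter_forwards` that prints the commands without running them:

```shell
#!/bin/sh
# Print one socat forwarder per node_exporter target, listening on IPv4 ports
# 9101, 9102, ... in the order the IPv6 addresses are given.
# exporter_forwards is a hypothetical helper; it prints commands only.
exporter_forwards() {
    port=9101
    for addr in "$@"; do
        echo "socat tcp4-listen:$port,fork,reuseaddr tcp6-connect:[$addr]:9100"
        port=$((port + 1))
    done
}
```

The `|& logger &` suffix used in the examples is bash syntax; it can be appended to each printed command when running them from a bash shell.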

1.5.2. Bootstrap¶

curl http://registry.ocp.labs.stroila.ca/cfg/bootstrap.ign > /boot/ignition/config.ign

touch /boot/ignition.firstboot

reboot

etcd-quorum-guard should not attempt to deploy 3 replicas if useUnsupportedUnsafeNonHANonProductionUnstableEtcd is set. As soon as the API is available from the bootstrap node, set useUnsupportedUnsafeNonHANonProductionUnstableEtcd:

oc patch etcd cluster -p='{"spec": {"unsupportedConfigOverrides": {"useUnsupportedUnsafeNonHANonProductionUnstableEtcd": true}}}' --type=merge --kubeconfig /etc/kubernetes/kubeconfig
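
Since the patch can only be applied once the API answers, a small retry loop avoids guessing the timing. The sketch below is a generic helper (the name `wait_for` is an assumption) that retries any command until it succeeds or the attempts run out.

```shell
#!/bin/sh
# Retry a command until it succeeds or the attempts run out.
# Usage: wait_for <attempts> <delay-seconds> <command> [args...]
# Returns 0 on the first success, 1 if every attempt failed.
wait_for() {
    attempts=$1
    delay=$2
    shift 2
    while [ "$attempts" -gt 0 ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        attempts=$((attempts - 1))
        # Sleep only if another attempt will follow.
        if [ "$attempts" -gt 0 ]; then
            sleep "$delay"
        fi
    done
    return 1
}
```

One possible use on the bootstrap node is `wait_for 60 10 oc get --raw /readyz --kubeconfig /etc/kubernetes/kubeconfig`, followed by the `oc patch etcd cluster` command once it returns successfully. The attempt count and delay here are arbitrary example values.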

1.5.3. Master¶

curl http://registry.ocp.labs.stroila.ca/cfg/master.ign > /boot/ignition/config.ign

touch /boot/ignition.firstboot

reboot

System firstboot followed by reboot

machine-config-daemon-pull.service

machine-config-daemon-firstboot.service

1.5.3.1. Master from Bootstrap¶

curl -k https://api-int.ocp.labs.stroila.ca:22623/config/master > master.ign

1.5.4. Decommission bootstrap¶

Upon successful deployment of the initial controllers, the bootstrap node will reach out to the active controllers to fetch the master ignition to be installed on disk.

1.6. Add workers¶

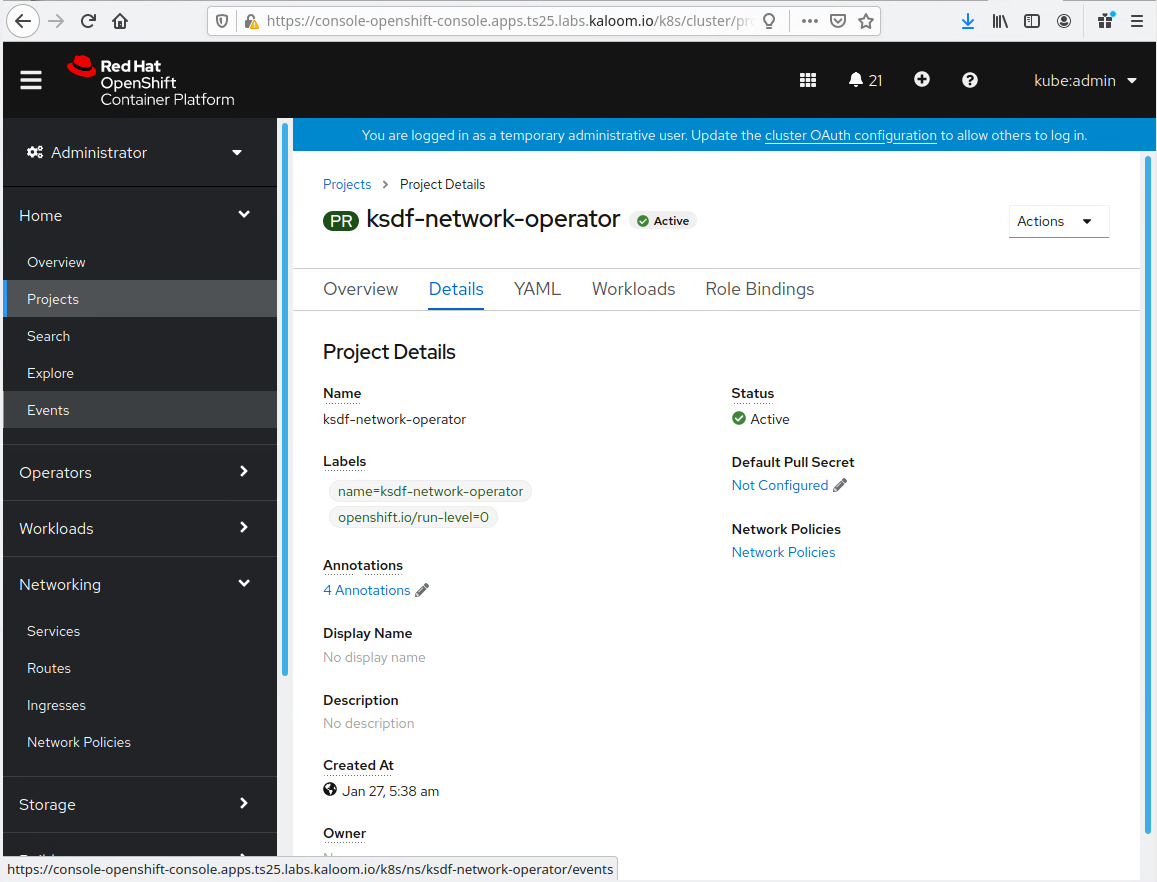

Once the basic OCP cluster is up and running, the workers will be attached to the cluster.

1.7. Deploy fabric services¶

When the basic OCP deployment is completed, the fabric deployment services will be initiated.

1.8. Node Update¶

Provided that the initial provisioning left a basic RHCOS installed on the node, iPXE chain loading can be used to trigger node reinstallation using VIM services.

1.8.1. iPXE¶

Embedding via the command line

Systems that do not have IPMI support need another method to trigger a fresh install, especially for the CI/CD use case.

This can be achieved by embedding a script, via the command line, into an iPXE image loaded by GRUB:

timeout 10

default 0

title iPXE

kernel (hd0,0)/ipxe.lkrn dhcp && chain http://vimserver/boot.php

This uses a standard version of the iPXE binary ipxe.lkrn. You can change the embedded script simply by editing the GRUB configuration file, with no need to rebuild the iPXE binary. This method works only with iPXE binary formats that support a command line, such as .lkrn.

Note

You may need to escape some special characters to allow them to be passed through to iPXE.

Embedding via an initrd

You can embed a script by passing in an initrd to iPXE. For example, to embed a script saved as myscript.ipxe into an iPXE image loaded by GRUB:

timeout 10

default 0

title iPXE

kernel (hd0,0)/ipxe.lkrn

initrd myscript.ipxe

This uses a standard version of the iPXE binary ipxe.lkrn. The myscript.ipxe file is a plain iPXE script file; there is no need to use a tool such as mkinitrd. You can change the embedded script by editing the myscript.ipxe file, with no need to rebuild the iPXE binary. This method works only with iPXE binary formats that support an initrd, such as .lkrn.
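
As an illustration of what myscript.ipxe could contain, the sketch below writes an iPXE script that performs the same two steps as the command-line example (`dhcp`, then `chain`). The helper name `write_ipxe_script` is an assumption, and `vimserver` is the placeholder host name already used in this section.

```shell
#!/bin/sh
# Write a minimal iPXE script equivalent to the command-line example:
# configure the network via DHCP, then chain-load the boot script.
# Usage: write_ipxe_script <output-path>
write_ipxe_script() {
    cat > "$1" <<'EOF'
#!ipxe
dhcp
chain http://vimserver/boot.php
EOF
}
```

Calling `write_ipxe_script myscript.ipxe` produces a plain-text file that GRUB can pass to ipxe.lkrn through the `initrd` line.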

1.9. Node Decommissioning¶

To remove a node from the cluster, drain the node following the standard OCP procedure.
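
A minimal sketch of that sequence, assuming the usual cordon, drain, and delete steps and the kubeconfig path used earlier in this document. The helper `decommission_cmds` and the `KUBECONFIG_PATH` variable are hypothetical; the helper only prints the commands so they can be reviewed first, and the drain flags may need adjusting for the workloads on the node.

```shell
#!/bin/sh
# Print the cordon, drain, delete sequence for one node.
# decommission_cmds is a hypothetical helper; it prints commands only.
decommission_cmds() {
    node=$1
    kubeconfig=${KUBECONFIG_PATH:-/opt/ocp/assets/auth/kubeconfig}
    echo "oc adm cordon $node --kubeconfig $kubeconfig"
    echo "oc adm drain $node --ignore-daemonsets --delete-emptydir-data --kubeconfig $kubeconfig"
    echo "oc delete node $node --kubeconfig $kubeconfig"
}
```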